Exploring how to build a Self-Driving Car, step-by-step with Udacity!

Editor’s note: David Silver (Program Lead for Udacity’s Self-Driving Car Engineer Nanodegree Program) continues his mission to write a new post for each of the 67 lessons currently in the program. We check in with him today as he introduces us to Lesson 5!

The 5th lesson of the Udacity Self-Driving Car Engineer Nanodegree Program is “MiniFlow.” Over the course of this lesson, students build their own neural network library, which we call MiniFlow.

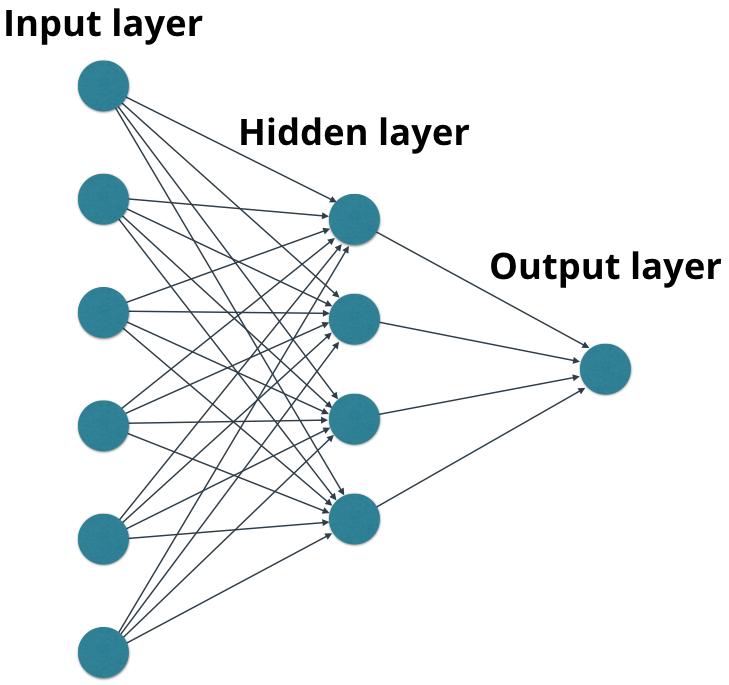

The lesson starts with a fairly basic, feedforward neural network, with just a few layers. Students learn to build the connections between the artificial neurons and implement forward propagation to move calculations through the network.
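
To make forward propagation concrete, here is a minimal sketch of a tiny network built from connected nodes. The class and function names (`Node`, `Input`, `Neuron`, `forward_pass`) are assumptions made up for this illustration and are not necessarily how MiniFlow itself is organized.

```python
class Node:
    """A node in a small computation graph (hypothetical design)."""

    def __init__(self, inputs=()):
        self.inputs = list(inputs)  # nodes whose values feed into this one
        self.value = None

    def forward(self):
        raise NotImplementedError


class Input(Node):
    """Holds a value supplied from outside the network."""

    def __init__(self, value):
        super().__init__()
        self.value = value

    def forward(self):
        pass  # nothing to compute, the value was set directly


class Neuron(Node):
    """Computes a weighted sum of its inputs plus a bias."""

    def __init__(self, inputs, weights, bias):
        super().__init__(inputs)
        self.weights = weights
        self.bias = bias

    def forward(self):
        weighted = sum(w * n.value for w, n in zip(self.weights, self.inputs))
        self.value = weighted + self.bias


def forward_pass(nodes):
    """Run forward() on each node; nodes must be listed in dependency order."""
    for node in nodes:
        node.forward()
    return nodes[-1].value


x1, x2 = Input(1.0), Input(2.0)
hidden = Neuron([x1, x2], weights=[0.5, -1.0], bias=0.25)
output = Neuron([hidden], weights=[2.0], bias=1.0)

result = forward_pass([x1, x2, hidden, output])
print(result)  # -1.5
```

The hidden neuron computes 0.5 * 1.0 - 1.0 * 2.0 + 0.25 = -1.25, and the output neuron turns that into 2.0 * -1.25 + 1.0 = -1.5.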

The real mind-bend comes in the “Linear Transform” concept, where we go from working with individual neurons to working with layers of neurons. Working with layers lets us dramatically speed up the network’s calculations, because we can represent each layer with matrix operations and take advantage of their associated optimizations. Sometimes this is called vectorization, and it’s a key reason why deep learning has become so successful.

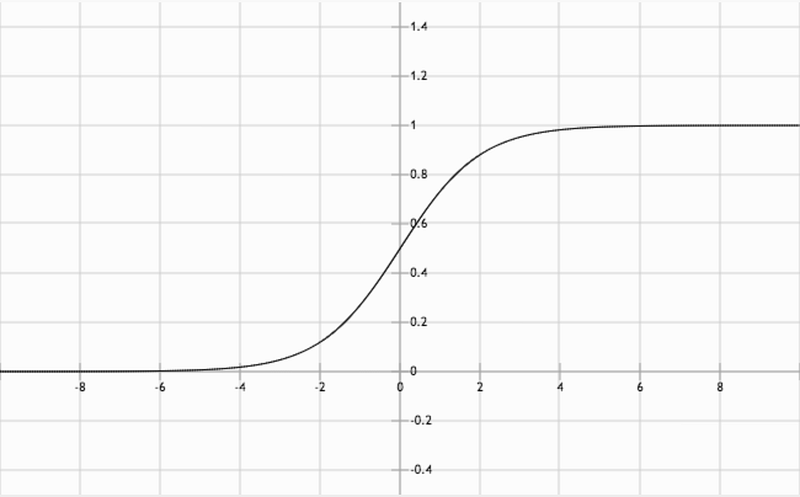
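
As a rough illustration of what moving from neurons to layers looks like, the sketch below computes the same linear transform twice with NumPy: once neuron by neuron with explicit loops, and once as a single matrix operation. The function names and the shape convention (one row of `X` per example, one column of `W` per neuron) are assumptions for this example.

```python
import numpy as np


def linear_loop(X, W, b):
    """Linear transform computed one neuron at a time."""
    n_samples, n_in = X.shape
    n_out = W.shape[1]
    Z = np.zeros((n_samples, n_out))
    for i in range(n_samples):      # each example
        for j in range(n_out):      # each neuron in the layer
            total = b[j]
            for k in range(n_in):   # each input to that neuron
                total += X[i, k] * W[k, j]
            Z[i, j] = total
    return Z


def linear_vectorized(X, W, b):
    """The same transform for the whole layer in one matrix operation."""
    return X @ W + b


X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])    # 3 examples, 2 inputs
W = np.array([[0.5, -1.0, 2.0], [1.5, 0.0, -0.5]])    # 2 inputs, 3 neurons
b = np.array([0.1, 0.2, 0.3])                         # one bias per neuron

Z_loop = linear_loop(X, W, b)
Z_vec = linear_vectorized(X, W, b)
print(np.allclose(Z_loop, Z_vec))
```

Both versions produce the same numbers. The vectorized one hands the work to NumPy's optimized matrix routines instead of running Python loops.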

Once students implement layers in MiniFlow, they learn about a particular activation function: the sigmoid function. Activation functions define the extent to which each neuron is “on” or “off”. With more sophisticated activation functions, like the sigmoid function, a neuron doesn’t have to be all the way “on” or “off”. Its output can take any value between 0 and 1.
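
For reference, the sigmoid function is 1 / (1 + e^(-x)). The short NumPy sketch below is one way to write it; the sample inputs are arbitrary values chosen for illustration.

```python
import numpy as np


def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


z = np.array([-10.0, -1.0, 0.0, 1.0, 10.0])
activations = sigmoid(z)
print(activations)
```

Large negative inputs give outputs near 0 (“off”), large positive inputs give outputs near 1 (“on”), and an input of 0 lands exactly halfway at 0.5.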

The next step is to train the network to better classify our data. For example, if we want the network to recognize handwriting, we need to adjust the weights associated with each neuron until the network produces the correct classification. Students implement an optimization technique called gradient descent to determine how to adjust the weights of the network.
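
Here is a minimal sketch of the gradient descent update on a toy problem with a single weight. The loss function (w - 3)^2, the learning rate, and the helper name `gradient_descent_step` are all assumptions chosen to keep the example small; a real network applies the same update to every weight.

```python
def gradient_descent_step(w, grad, learning_rate):
    """Move the weight a small step against the gradient of the loss."""
    return w - learning_rate * grad


# Toy loss: (w - 3)^2, which is smallest at w = 3.
# Its gradient with respect to w is 2 * (w - 3).
w = 0.0
for _ in range(100):
    grad = 2.0 * (w - 3.0)
    w = gradient_descent_step(w, grad, learning_rate=0.1)

print(w)  # very close to 3.0
```

Each step shrinks the distance to the minimum by a constant factor here, so the weight settles near 3. With a learning rate that is too large, the same loop would overshoot and diverge instead.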

Finally, students implement backpropagation, which passes the error gradients behind those weight adjustments backward through the network, from output to input. If we repeat this thousands of times, hopefully we’ll wind up with a trained, accurate network.
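
The sketch below puts the pieces together for a very small network with one sigmoid hidden layer and a linear output, trained with a mean squared error loss. The network size, the synthetic data, the learning rate, and every function name are assumptions made for this illustration; this is not MiniFlow's code.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def forward(X, params):
    """Forward pass: hidden sigmoid layer, then a linear output layer."""
    W1, b1, W2, b2 = params
    h = sigmoid(X @ W1 + b1)
    y_hat = h @ W2 + b2
    return h, y_hat


def loss_fn(y_hat, y):
    """Mean squared error."""
    return np.mean((y_hat - y) ** 2)


def backward(X, y, h, y_hat, params):
    """Backpropagation: apply the chain rule from the output back to the input."""
    W1, b1, W2, b2 = params
    d_yhat = 2.0 * (y_hat - y) / y.size   # gradient of the loss w.r.t. y_hat
    dW2 = h.T @ d_yhat                    # output layer weights
    db2 = d_yhat.sum(axis=0)
    d_h = d_yhat @ W2.T                   # pass the gradient back to the hidden layer
    d_z1 = d_h * h * (1.0 - h)            # sigmoid derivative is h * (1 - h)
    dW1 = X.T @ d_z1                      # hidden layer weights
    db1 = d_z1.sum(axis=0)
    return [dW1, db1, dW2, db2]


rng = np.random.default_rng(0)
X = rng.normal(size=(16, 2))              # synthetic inputs
y = X[:, :1] - 2.0 * X[:, 1:]             # synthetic targets, shape (16, 1)

params = [rng.normal(size=(2, 3)), np.zeros(3),
          rng.normal(size=(3, 1)), np.zeros(1)]

learning_rate = 0.05
losses = []
for _ in range(500):
    h, y_hat = forward(X, params)
    losses.append(loss_fn(y_hat, y))
    grads = backward(X, y, h, y_hat, params)
    # Gradient descent update using the backpropagated gradients.
    params = [p - learning_rate * g for p, g in zip(params, grads)]

print(losses[0], losses[-1])
```

The loop repeats the same three steps each time: a forward pass, a backward pass to get the gradients, and a gradient descent update. Comparing the first and last entries of `losses` shows whether training is making progress.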

And once students have finished this lesson, they have their own Python library they can use to build as many neural networks as they want!

If all of that sounds interesting to you, maybe you should apply to join the Udacity Self-Driving Car Engineer Nanodegree Program and learn to become a Self-Driving Car Engineer!