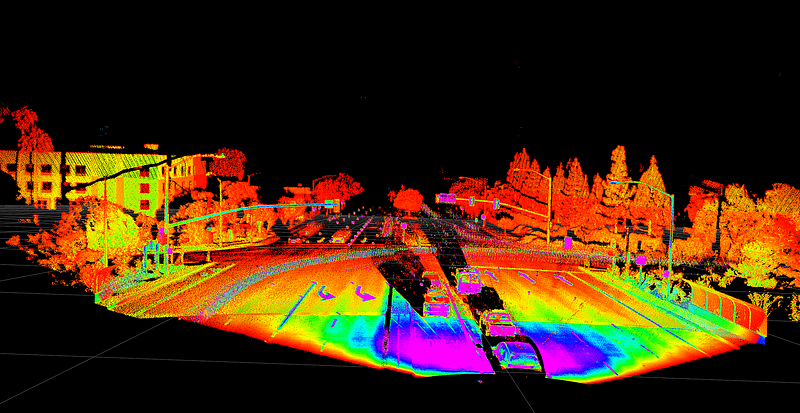

A lidar startup named Innovusion recently raised $30 million to deliver “image-quality” lidar. As optics.org points out, several startups are racing to build laser-based autonomous vehicle sensors: Luminar, AEye, Quanergy, Oryx, Velodyne (the industry standard), and surely a few others.

Credit to Innovusion, though: “image-quality lidar” really frames the issue in a way that I hadn’t seen before. Cost aside, is it really possible to replace cameras with lidar?

Watching the Innovusion demo video, the answer seems to be “closer, but not yet.” The quality of the lidar scan is terrific. However, “image quality” isn’t quite right. The signs that appear in the video are illegible, and at least to my eyes, the traffic signal was not classifiable.

It’s exciting to see how much work is being done to push lidar resolution to the point where it is competitive with cameras.

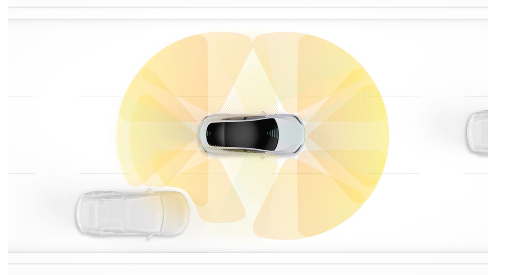

It would also be exciting to see cameras develop their measurement abilities to compete with lidar and radar. Generally, cameras are terrific for detection and classification tasks, but they measure distances, heights, and other dimensions poorly.
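To make that measurement gap concrete, here is a minimal sketch of one reason camera ranging degrades with distance. It assumes a rectified stereo camera pair, which is my assumption for illustration and not something discussed above, and it uses the standard pinhole stereo relation Z = f · B / d. The function names, the focal length, the baseline, and the quarter-pixel matching error are all hypothetical values chosen for the example.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth from the pinhole stereo relation Z = f * B / d."""
    return focal_px * baseline_m / disparity_px


def depth_error_m(
    focal_px: float,
    baseline_m: float,
    depth_m: float,
    disparity_error_px: float = 0.25,  # hypothetical sub-pixel matching error
) -> float:
    """First-order depth uncertainty: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical rig: 1000-pixel focal length, 30 cm baseline.
FOCAL_PX = 1000.0
BASELINE_M = 0.3

if __name__ == "__main__":
    for depth in (10.0, 50.0, 100.0):
        err = depth_error_m(FOCAL_PX, BASELINE_M, depth)
        print(f"{depth:5.0f} m -> +/- {err:.2f} m")
```

Under this model the depth uncertainty grows with the square of the distance, so the same matching error costs 100 times more at 100 meters than at 10 meters. Direct time-of-flight ranging does not share this geometry, which is part of why closing the gap from the camera side is hard.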

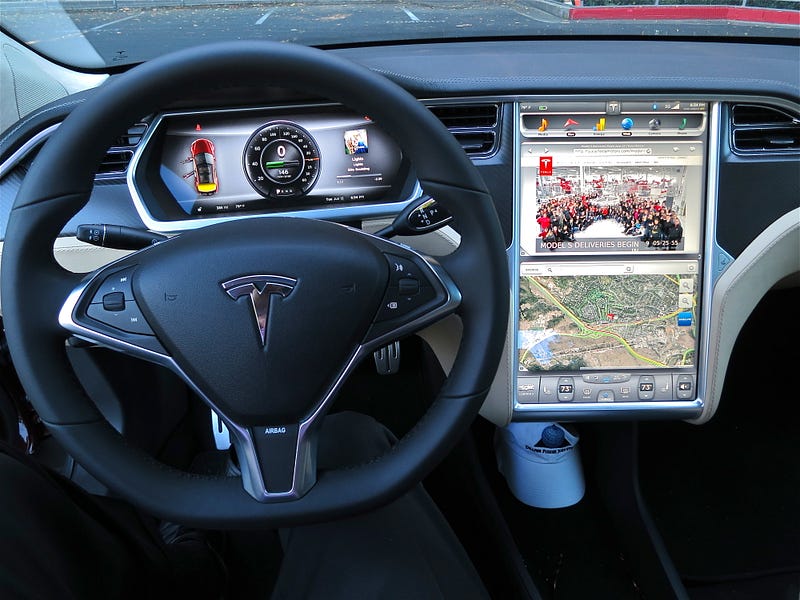

And while many startups are jumping into the lidar space, far fewer are working on perception with cameras. That work tends to be left to academic researchers, automotive manufacturers, and Tier 1 suppliers. Mobileye, the most prominent automotive computer vision specialist (now part of Intel), has mostly been quiet about its computer vision work.

With so many companies pushing lidar resolution to camera-like levels, there might be an opening for some computer vision startups to push camera measurements to lidar-like levels.