At CES, Udacity and Baidu jointly announced a free intro course for the Apollo self-driving platform!

Big news from CES! Udacity is going to produce a one-month free course on developing self-driving car software with Baidu Apollo! Sebastian Thrun, Udacity’s founder (and the father of the self-driving car), announced this together with Baidu COO Qi Lu at CES today.

Apollo

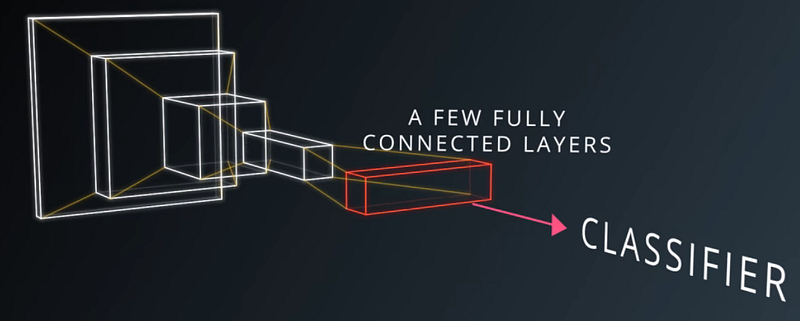

Baidu has open-sourced its self-driving car software stack, Apollo, with the goal of creating the “Android of the autonomous driving industry”. The Apollo platform is organized into four layers:

- Cloud Service

- Apollo Open Software Stack

- Reference Hardware Platform

- Reference Vehicle Platform

Udacity’s upcoming “Intro to Apollo” course will focus on the top two layers: Cloud Service and Apollo Open Software Stack.

The Course

Apollo is an incredibly exciting platform in the autonomous vehicle industry. We are thrilled to work with the Apollo team to teach students and engineers around the world how to build self-driving car software quickly using the Apollo stack.

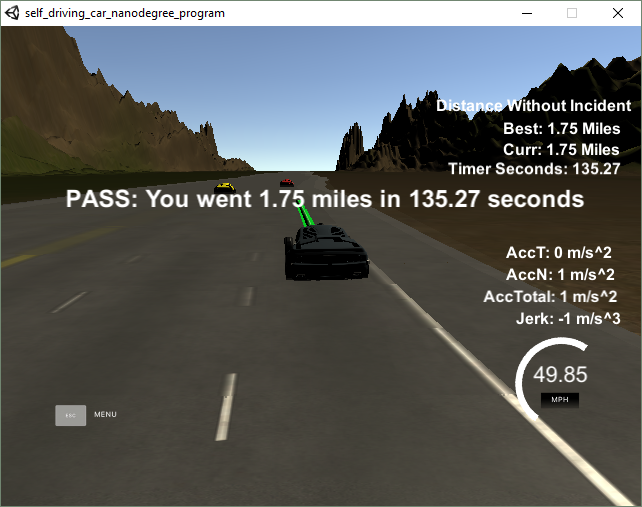

I am especially delighted that this will be a free course, open to anyone who wants to enter this amazing field. There is huge demand for knowledge about how self-driving cars work, and this course will help educate the world on the topic. Our Self-Driving Car Engineer Nanodegree Program is an intense nine-month journey to becoming a self-driving car engineer. It offers an amazing learning experience, but it is designed for advanced engineers. And while our Intro to Self-Driving Cars Nanodegree program is an excellent point of entry for aspiring learners who are newer to the field, it offers an equally immersive experience. This free course adds something new and important to the range of learning options.

China

This is a special opportunity for us to collaborate with Baidu, one of the leading companies in China. China is a leader in the autonomous vehicle industry, and Chinese students currently make up 5% of enrollments in Udacity’s Self-Driving Car Engineer Nanodegree Program and 20% of enrollments across all Udacity programs. A major focus for our Self-Driving Car Program in 2018 is to reach even more students in China.

The course will be developed jointly by Baidu’s Apollo team, the Udacity Self-Driving Car team in Mountain View, and the Udacity China team. The course will be taught in English, but it is a new experiment for us: developing course material in one of our offices outside the US. I’m excited.

Did I mention I’m excited about this course? Because I’m excited!