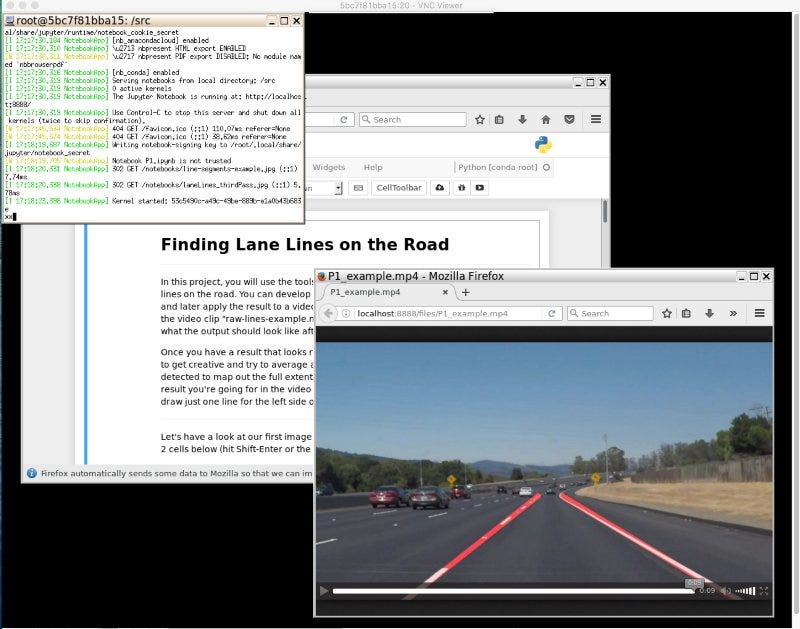

Today is my first day as a motion control engineer at Voyage. I’m so excited!

Voyage began years ago as part of Udacity. Oliver Cameron, Voyage’s co-founder and CEO, was my first manager at Udacity, and the rest of Voyage’s founding team were my colleagues when I joined Udacity in 2016. I’m thrilled to join them again to work on self-driving cars.

Over the past four years, I have been so impressed by Voyage’s progress. They are now on their third-generation vehicle, and they are already testing a fully driverless autonomous stack.

My role at Voyage will be on the motion control team, which handles steering, acceleration, and deceleration. It’s the “act” part of the “sense-plan-act” robotics cycle. This should be a lot of fun!

One of the biggest draws of joining Voyage was the chance to make an impact across many different parts of the autonomy stack, and I hope to work on a variety of components over time. Keep an eye out!

In the meantime:

- Join me at Voyage!

- Check out Voyage in action 🙂