Want to get a job working on self-driving cars? Read on.

How I Landed My Dream Job Working On Self-driving Cars

The guiding star of the Udacity Self-Driving Car Nanodegree Program is to prepare students for jobs working on autonomous vehicles. So we were excited that Galen found his dream job working on autonomous vehicles for HERE in Boulder, Colorado. He also gives Udacity lots of credit, which is generous of him 🙂

“The private Slack channel for students is filled with a tangible excitement. I’ve never been part of such a large student body, let alone a student body that is committed to the success of every student (no grading curve here). Between Slack, the dedicated forums, and your own private mentor, there is no reason to be stuck on a problem; there are so many people willing to help answer your questions. Instead, you can focus on finding your own way to improve the foundations of the projects.”

Self-driving Cars — Deep neural networks and convolutional neural networks applied to clone driving behavior

Ricardo provides a thorough rundown of his Behavioral Cloning project, which runs on both simulators. He synthesized and built on the insights of earlier Udacity students:

“My first step was to evaluate the driver log steering histograms for 100 bins and do all the required transformations, drops, and augmentations to balance it. Here I followed the same methodology as in the well-explained pre-processing from Mez Gebre, thanks Mez!”
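Ricardo's post follows Mez Gebre's pre-processing, which isn't reproduced here. As a rough illustration only, here is one generic way to balance a steering-angle histogram with 100 bins: cap each bin at a fixed count by randomly dropping samples, then augment by mirroring images left-right while negating the steering angle. The function names `balance_steering` and `flip_augment`, the default cap, and the (N, H, W, C) image layout are assumptions for this sketch, not either author's code.

```python
import numpy as np


def balance_steering(angles, n_bins=100, max_per_bin=None, seed=0):
    """Return sorted indices of samples to keep so no histogram bin exceeds max_per_bin.

    Hypothetical helper: over-represented bins (typically angles near zero)
    are randomly down-sampled; sparse bins are kept whole.
    """
    rng = np.random.default_rng(seed)
    angles = np.asarray(angles, dtype=float)
    counts, edges = np.histogram(angles, bins=n_bins)
    if max_per_bin is None:
        # Assumed default cap: the mean count of the non-empty bins.
        max_per_bin = int(np.mean(counts[counts > 0]))
    # Digitizing against the inner edges maps each angle to a bin in 0..n_bins-1,
    # matching np.histogram's half-open bins (last bin closed).
    bin_ids = np.digitize(angles, edges[1:-1])
    keep = []
    for b in range(n_bins):
        idx = np.flatnonzero(bin_ids == b)
        if len(idx) > max_per_bin:
            idx = rng.choice(idx, size=max_per_bin, replace=False)
        keep.extend(idx.tolist())
    return np.sort(np.array(keep, dtype=int))


def flip_augment(images, angles):
    """Mirror each (H, W, C) image left-right and negate its steering angle."""
    flipped = images[:, :, ::-1, :]  # reverse the width axis
    return np.concatenate([images, flipped]), np.concatenate([angles, -angles])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic log: mostly straight driving (angle 0) plus a few turns.
    angles = np.concatenate([np.zeros(900), rng.uniform(-1, 1, 100)])
    keep = balance_steering(angles, n_bins=100, max_per_bin=20)
    print(len(angles), "->", len(keep), "samples after balancing")
```

Flipping doubles the data and makes left and right turns equally common, which is why it pairs naturally with down-sampling the near-zero bins.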

Behavioral Cloning: Tiny Mistake Cost Me 15 days

Yazeed was struggling with the Behavioral Cloning Project when he realized he mixed up his colorspaces. Students on Slack pointed out that this might have been because I mixed up the colorspaces in some demo code. Oops!

“I spent about 15 days training my network over and over in the third project of the Self-Driving Car Engineer Nanodegree by Udacity, and it drove me crazy because it couldn’t keep itself on the road. I reviewed the code hundreds of times and found nothing wrong with it. To tell you the truth, when I had almost given up, I read an article that had nothing to do with this project. It mentioned that OpenCV reads images as BGR (Blue, Green, Red) and, guess what, drive.py uses RGB. What the hell was I doing for 2 weeks!!!”
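The fix boils down to making sure the images the network trains on and the images `drive.py` feeds it use the same channel order. A minimal sketch, assuming images are NumPy arrays in (height, width, channels) layout: reversing the last axis swaps BGR and RGB. In OpenCV itself, `cv2.cvtColor(image, cv2.COLOR_BGR2RGB)` performs the same conversion. The helper name `bgr_to_rgb` is hypothetical.

```python
import numpy as np


def bgr_to_rgb(image):
    """Swap BGR <-> RGB by reversing the channel axis of an (H, W, 3) array.

    Equivalent to cv2.cvtColor(image, cv2.COLOR_BGR2RGB); returns a contiguous
    copy so downstream code (e.g. a model's input pipeline) gets a normal array.
    """
    return np.ascontiguousarray(image[..., ::-1])


if __name__ == "__main__":
    # A single pure-blue pixel as OpenCV would store it: B=255, G=0, R=0.
    blue_bgr = np.array([[[255, 0, 0]]], dtype=np.uint8)
    print(bgr_to_rgb(blue_bgr)[0, 0])
```

Converting once, right where training images are loaded, keeps the whole training pipeline in the same color space as the images arriving at drive time.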

What I have learned from the first term of Udacity Self driving car nanodegree program.

Hadi went back and reviewed everything he learned during Term 1. He included all of his project videos, which are awesome!

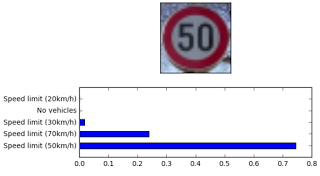

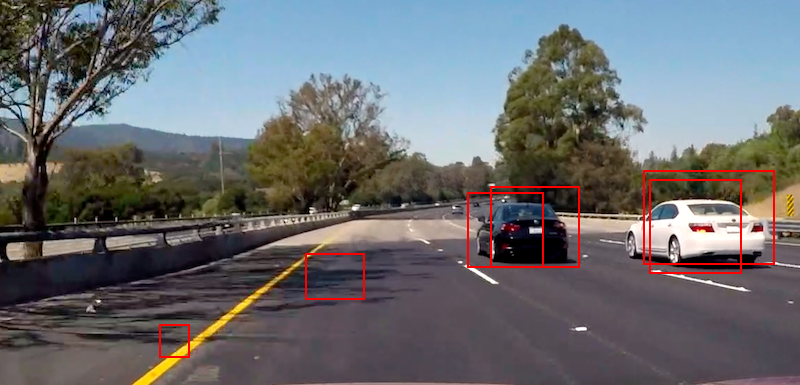

“The fifth and last project of the first term is to write a program that detects vehicles and draws a bounding box around each detected vehicle. The project is done using a Support Vector Machine (SVM), a kind of classifier used to differentiate between classes. In this case, the classifier takes multiple features of images as inputs and learns to classify them into two classes: cars and non-cars.”
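Hadi's post describes the SVM at a high level. A hedged sketch of the idea, assuming each image has already been turned into a fixed-length feature vector (the synthetic 2-D points below merely stand in for real image features): a linear SVM trained by stochastic sub-gradient descent on the hinge loss (the Pegasos method), labelling cars +1 and non-cars -1. The function names and hyperparameters are illustrative assumptions; in practice a library implementation such as scikit-learn's `LinearSVC` is the usual choice.

```python
import numpy as np


def _add_bias(X):
    # Append a constant 1 column so the last weight acts as the bias term.
    return np.hstack([X, np.ones((X.shape[0], 1))])


def train_linear_svm(X, y, lam=0.01, epochs=20, seed=0):
    """Pegasos-style linear SVM.

    X: (n, d) feature matrix; y: labels in {-1, +1} (+1 = car, -1 = non-car).
    Minimizes lam/2 * ||w||^2 + mean hinge loss with step size 1 / (lam * t).
    """
    rng = np.random.default_rng(seed)
    Xb = _add_bias(X)
    w = np.zeros(Xb.shape[1])
    t = 0
    for _ in range(epochs):
        for i in rng.permutation(len(y)):
            t += 1
            eta = 1.0 / (lam * t)
            violates = y[i] * (Xb[i] @ w) < 1  # inside the margin or misclassified
            w *= 1 - eta * lam                 # gradient step on the L2 regularizer
            if violates:
                w += eta * y[i] * Xb[i]        # sub-gradient step on the hinge loss
    return w


def predict(w, X):
    """Return +1 (car) or -1 (non-car) for each row of X."""
    return np.where(_add_bias(X) @ w >= 0, 1, -1)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    cars = rng.normal(2.0, 0.5, (100, 2))       # stand-in "car" features
    non_cars = rng.normal(-2.0, 0.5, (100, 2))  # stand-in "non-car" features
    X = np.vstack([cars, non_cars])
    y = np.array([1] * 100 + [-1] * 100)
    w = train_linear_svm(X, y)
    print("training accuracy:", (predict(w, X) == y).mean())
```

In a vehicle-detection pipeline, a classifier like this would be run on feature vectors extracted from sliding windows, and windows labelled +1 become candidate bounding boxes.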

Generating Faces with Deep Convolutional GANs

Dominic wrote up the generative adversarial network that he trained for the Udacity Deep Learning Nanodegree Foundations Program (DLFND). Normally I downvote blog posts from other programs, but Dominic is a Self-Driving Car student, DLFND is great, and GANs are very cool, so I’ll let it slide.

“The training took approximately 15–20 minutes and even though the first few iterations looked like something demonic — the end result was fine.”
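Dominic's write-up focuses on the training run itself. For readers new to GANs, here is a small sketch of the standard losses a DCGAN is typically trained with, written in NumPy against raw discriminator logits. The discriminator is pushed to output "real" for real images and "fake" for generated ones, while the generator uses the common non-saturating loss, which rewards fooling the discriminator. The function names are assumptions, and this is not taken from Dominic's code.

```python
import numpy as np


def softplus(x):
    # log(1 + exp(x)), computed without overflow for large |x|.
    return np.logaddexp(0.0, x)


def discriminator_loss(real_logits, fake_logits):
    """Binary cross-entropy: label 1 for real images, label 0 for generated ones.

    -log(sigmoid(x)) == softplus(-x) and -log(1 - sigmoid(x)) == softplus(x).
    """
    return np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits))


def generator_loss(fake_logits):
    """Non-saturating generator loss: -log(sigmoid(D(G(z)))) averaged over the batch."""
    return np.mean(softplus(-fake_logits))


if __name__ == "__main__":
    # An untrained discriminator that outputs logit 0 (probability 0.5) everywhere.
    undecided = np.zeros(8)
    print("D loss:", discriminator_loss(undecided, undecided))
    print("G loss:", generator_loss(undecided))
```

During training the two losses are minimized in alternation: one step on the discriminator's weights, then one step on the generator's, which is why early samples can look strange before the generator catches up.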