Great posts by Udacity Self-Driving Car students on diverse topics! End-to-end deep neural networks, hacking a car, and the history of autonomy.

End-to-end learning for self-driving cars

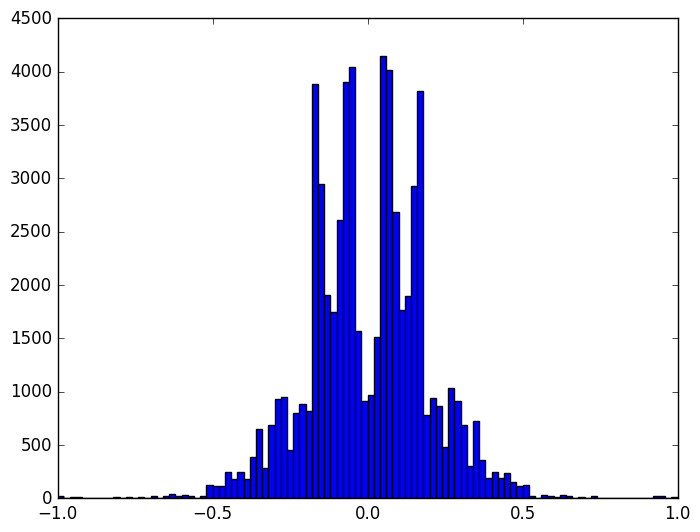

This is a concise, practical post detailing how Alex built his end-to-end network for driving a simulated vehicle. His discussion of balancing the dataset is particularly interesting.

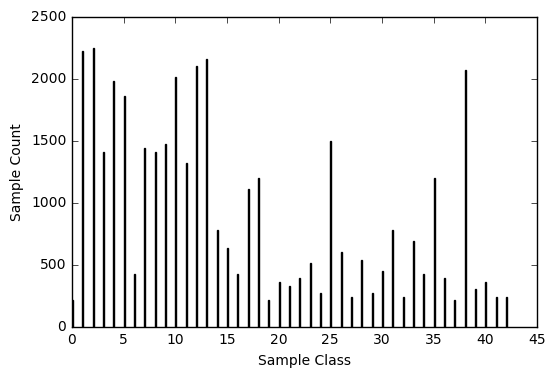

Just as one would expect, resulting dataset was extremely unbalanced and had a lot of examples with steering angles close to 0 (e.g. when the wheel is “at rest” and not steering while driving in a straight line). So I applied a designated random sampling which ensured that the data is as balanced across steering angles as possible. This process included splitting steering angles into n bins and using at most 200 frames for each bin.
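To make the balancing step concrete, here is a minimal sketch of capping each steering-angle bin at 200 frames. It is not Alex's code: the function name `balance_by_steering`, the equal-width bins, the default of 100 bins (the quote does not give a value for n), and the fixed random seed are all assumptions made for illustration.

```python
import random
from collections import defaultdict


def balance_by_steering(angles, n_bins=100, max_per_bin=200, seed=0):
    """Return sorted indices of the frames kept after capping each bin.

    Hypothetical helper: n_bins and equal-width binning are assumptions;
    only the cap of 200 frames per bin comes from the quoted post.
    """
    rng = random.Random(seed)
    lo, hi = min(angles), max(angles)
    # Fall back to a width of 1.0 if every angle is identical.
    width = (hi - lo) / n_bins or 1.0

    # Group frame indices by the bin their steering angle falls into.
    bins = defaultdict(list)
    for i, angle in enumerate(angles):
        b = min(int((angle - lo) / width), n_bins - 1)  # clamp the max value
        bins[b].append(i)

    # Keep every frame from sparse bins, a random subset from crowded ones.
    kept = []
    for indices in bins.values():
        if len(indices) > max_per_bin:
            indices = rng.sample(indices, max_per_bin)
        kept.extend(indices)
    return sorted(kept)


# Toy data: 1000 straight-driving frames plus a thin spread of turns.
angles = [0.0] * 1000 + [-1.0 + 0.04 * i for i in range(51)]
kept = balance_by_steering(angles, n_bins=10, max_per_bin=200)
```

Frames from rare steering angles all survive, while the crowded near-zero bin is randomly thinned, which is the effect the quote describes.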

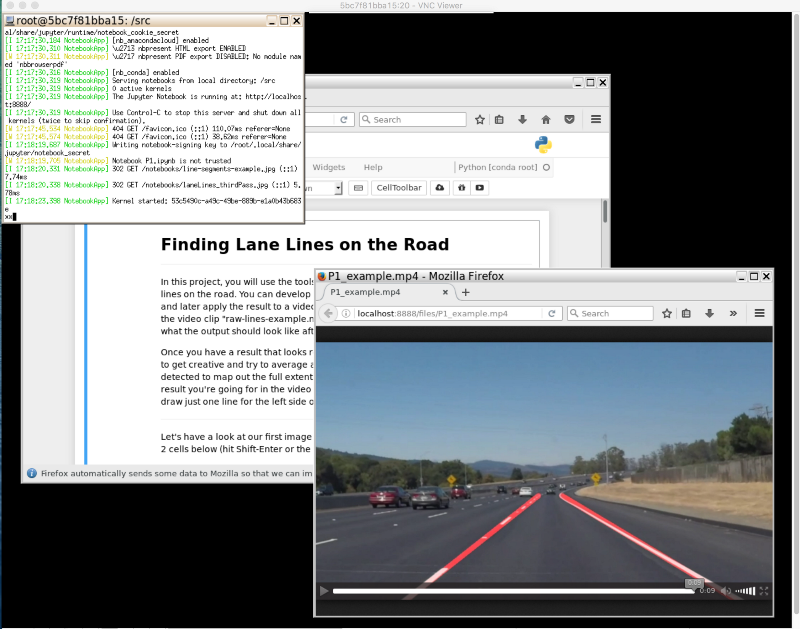

Jetson TX1 and ZED stereo camera warm up.

This is the latest in Dylan’s series on hacking his Subaru and turning it into a self-driving car. (This is not part of the Udacity program and we do not recommend this!) In this post, he unpacks his Jetson TX1 and gets the cameras to do some neat tricks.

The lighting conditions seem to make a difference with regard to depth accuracy. I’m excited to see how it performs outdoors. I plan to mount it just in front of my rear view mirror, where it will be mostly hidden from the driver’s field of view. I’m not sure about USB cable routing yet. It’s long enough to reach directly down to the dashboard, but I’d rather conceal it behind some interior panels.

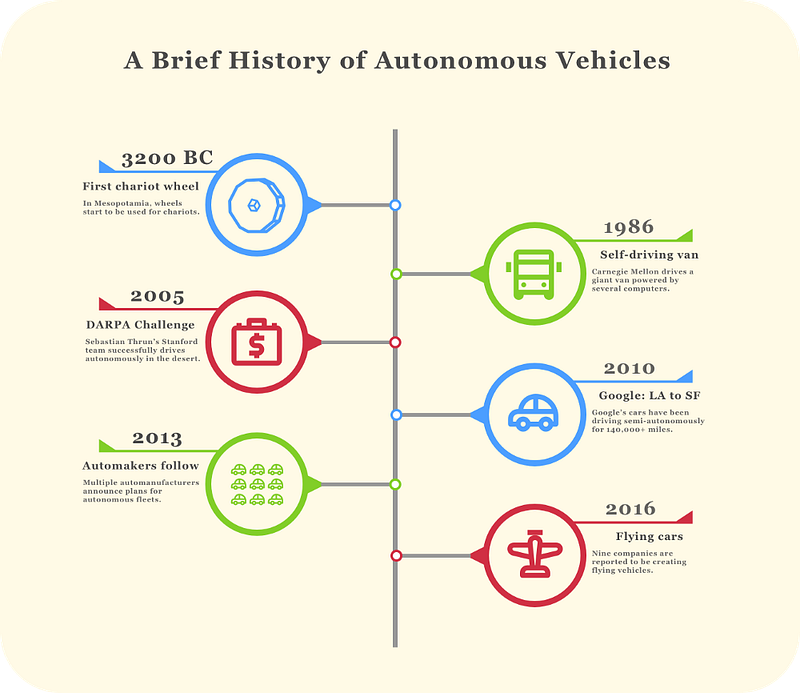

2017: The year for autonomous vehicles

This is a great historical summary of autonomy, starting with the wheel (really, starting with ALVINN) and going through current efforts at autonomous personal aircraft.

If you had come to this article 10 years ago, hardly anyone would have heard of autonomous cars, or thought them possible for that matter. Now, there are ~100 companies working on autonomous vehicles, dozens of which have already been operating semi-autonomous vehicles.