I really love this video that Lyft made with Udacity to introduce the Lyft Perception Challenge.

I neglected to post it yesterday, so here it is today.

If you’re a Udacity Self-Driving Car student, you should enter the challenge and go work at Lyft!

One of Udacity’s big initiatives this year is to build our alumni network. Udacity has tens of thousands of graduates around the globe, and our goal is to help our alumni advance their careers in whatever direction they choose.

To that end, the Udacity Alumni Network has a calendar full of career-focused online events coming up. Over the next month alone, our Careers & Alumni teams will host:

Check out the full calendar on Eventbrite 🙂

A few weeks ago, I had the delight of visiting Europe with Udacity’s Berlin-based European team, meeting both automotive partners and Udacity students. The trip was so much fun!

We started in Stuttgart, where we met with our partners at Bosch and toured their Abstatt campus. Their campus reminds me of a plush Silicon Valley office, except instead of overlooking Highway 101, they overlook vineyards and a European castle.

Thanks to Udacity student Tolga Mert for organizing!

In the evening, Bosch’s Mirko Franke joined us at the Connected Autonomous Driving Meetup, organized by Udacity student Rainer Bariess.

We discussed the self-driving ecosystem and, of course, how to get a job working on self-driving cars at Bosch.

The next day we headed to Berlin to prepare for our deep learning workshop at Automotive Tech.AD. What a great collection of autonomous vehicle engineers from companies across Europe!

In the evening we hosted a Meetup for current and prospective Udacity students at our Berlin office. It is always a delight to meet students and hear firsthand what they love about Udacity, and how they feel we can improve the student experience.

Our final stop was London, for an interview with Alan Martin at Alphr.

That evening, Udacity alumnus Brian Holt, Head of Autonomous Driving at Parkopedia, hosted us at the London Self-Driving/Autonomous Car Technology Meetup. We had a blast talking about the future of self-driving (and even flying!) cars.

It’s a lot of fun to fly across the globe and see different places, but the best experience of all is getting to meet students from all different parts of the world.

We learn what our students are working on, what excites them about self-driving cars, and about the difference Udacity has made in their lives. It’s wonderful!

If you’re interested in becoming a part of our global Self-Driving Car community, consider enrolling in one of our Nanodegree programs. No matter your skills and experience, we’ve got a program for you!

https://www.udacity.com/course/intro-to-self-driving-cars--nd113

The Udacity Self-Driving Car European tour continues.

On Thursday evening, March 8, the London Self-Driving Car Meetup will be hosting Udacity and me from 6–9pm at Parkopedia just south of London Bridge.

I’ll be talking about the future of self-driving cars and the future of Udacity. Please come say hello!

Many students describe the Path Planning Project as the most challenging project in the entire Udacity Self-Driving Car Engineer Nanodegree program. This is understandable. Path planning is hard! But it’s not too hard, and I’m going to tell you a bit about the project—and about path planning in general—in this post.

There are three core components to path planning: 1) Predicting what other vehicles on the road will do next, 2) Deciding on a maneuver to execute, in response to our own goals, and to our predictions about other vehicles, and 3) Building a trajectory to execute the maneuver we decide on.
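To make those three stages concrete, here is a deliberately tiny Python sketch. Everything in it is an assumption for illustration, not the project's starter code: a hypothetical three-lane road described by s (distance along the road) and d (distance across it), 4-meter lanes, other vehicles predicted to hold their lane and speed, and a straight-line shift in d instead of a properly smoothed trajectory.

```python
from dataclasses import dataclass

LANE_WIDTH = 4.0  # assumed lane width in meters
NUM_LANES = 3     # assumed three-lane highway: 0 = left, 1 = middle, 2 = right


@dataclass
class Vehicle:
    lane: int     # lane index
    s: float      # distance traveled along the road, in meters
    speed: float  # speed along the road, in m/s


def predict(vehicles, horizon):
    """1) Prediction: assume each vehicle keeps its lane and speed for `horizon` seconds."""
    return [Vehicle(v.lane, v.s + v.speed * horizon, v.speed) for v in vehicles]


def decide(ego, predicted, horizon, gap=30.0):
    """2) Behavior: keep our lane unless a car will be within `gap` meters ahead;
    otherwise pick an adjacent lane with no car within `gap` meters ahead or behind."""
    ego_s = ego.s + ego.speed * horizon  # where we expect to be at the horizon

    def too_close(lane, look_behind):
        for v in predicted:
            if v.lane != lane:
                continue
            ahead = v.s - ego_s
            if 0.0 <= ahead < gap or (look_behind and -gap < ahead < 0.0):
                return True
        return False

    if not too_close(ego.lane, look_behind=False):
        return ego.lane
    for lane in (ego.lane - 1, ego.lane + 1):
        if 0 <= lane < NUM_LANES and not too_close(lane, look_behind=True):
            return lane
    return ego.lane  # no safe change: stay (a real planner would also slow down)


def build_trajectory(ego, target_lane, n_points=50, dt=0.02):
    """3) Trajectory: (s, d) points every `dt` seconds at constant speed, shifting
    d linearly toward the target lane's center (a real planner would smooth this)."""
    start_d = LANE_WIDTH * (ego.lane + 0.5)
    end_d = LANE_WIDTH * (target_lane + 0.5)
    points = []
    for i in range(1, n_points + 1):
        frac = i / n_points
        points.append((ego.s + ego.speed * dt * i, start_d + (end_d - start_d) * frac))
    return points


ego = Vehicle(lane=1, s=0.0, speed=20.0)
others = [Vehicle(lane=1, s=35.0, speed=10.0)]  # slower car ahead in our lane
predicted = predict(others, horizon=1.0)
target = decide(ego, predicted, horizon=1.0)
path = build_trajectory(ego, target)
print(target, len(path), path[-1])
```

Keeping the three stages as separate functions mirrors the pipeline described above: each one can be swapped for something more sophisticated without touching the others.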

This is a project that provides students a lot of freedom in how to implement their solution. Here are five approaches from our amazing students!

I love Mithi’s series of posts on the Path Planning Project. Her first post covers the project outline and her solution design process. The second post covers her data structures and pipeline. The third and final post dives into the mechanics and math required to actually produce a path. This is a great series of posts for anybody thinking about building a path planner.

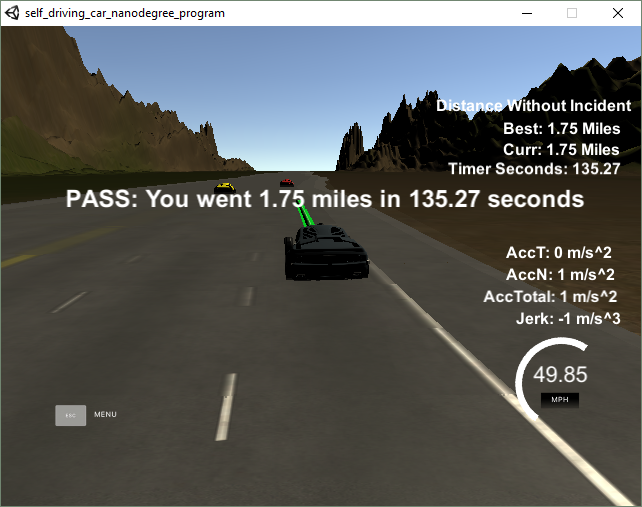

The goal is to create a path planning pipeline that would smartly, safely, and comfortably navigate a virtual car around a virtual highway with other traffic. We are given a map of the highway, as well as sensor fusion and localization data about our car and nearby cars. We are supposed to give back a set of points (x, y) in a map that a perfect controller will execute every 0.02 seconds. Navigating safely and comfortably means we don’t bump into other cars, and we don’t exceed the maximum speed, acceleration, and jerk requirements. Navigating smartly means we change lanes when the car in front of us is too slow.
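Because the controller consumes one point every 0.02 seconds, speed, acceleration, and jerk can all be estimated from a path by finite differences between consecutive points. The helper below is a hypothetical sketch, not part of the simulator, and its default limits (about 50 mph, 10 m/s², and 10 m/s³) are assumptions; use whatever values the project rubric actually specifies.

```python
import math

DT = 0.02  # seconds between consecutive points, per the project description


def check_limits(points, max_speed=22.35, max_accel=10.0, max_jerk=10.0, dt=DT):
    """Return the names of the limits a path of (x, y) points (in meters) would break.

    Uses finite differences along the path; this only measures tangential
    acceleration and ignores the sideways (centripetal) component on curves.
    """
    speeds = [math.dist(p, q) / dt for p, q in zip(points, points[1:])]
    accels = [(v2 - v1) / dt for v1, v2 in zip(speeds, speeds[1:])]
    jerks = [(a2 - a1) / dt for a1, a2 in zip(accels, accels[1:])]

    violations = []
    if any(v > max_speed for v in speeds):
        violations.append("speed")
    if any(abs(a) > max_accel for a in accels):
        violations.append("acceleration")
    if any(abs(j) > max_jerk for j in jerks):
        violations.append("jerk")
    return violations


cruise = [(0.4 * i, 0.0) for i in range(50)]    # 20 m/s in a straight line
speeding = [(0.6 * i, 0.0) for i in range(50)]  # 30 m/s in a straight line
print(check_limits(cruise), check_limits(speeding))
```

Note how quickly small spacing changes add up: a single point placed 0.2 m further than its neighbors already implies hundreds of m/s² of acceleration at this sampling rate.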

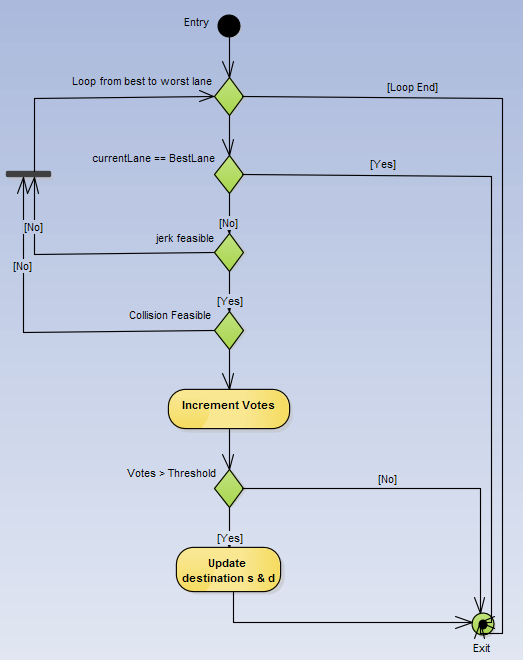

In contrast to Mithi’s articles, which take you through her process of building a path planner, Mohan’s writeup does a great job of describing the final result. In particular, I was interested to read about the voting system he used for deciding on lane changes.

Instead of quoting Mohan, I’ll share the flowchart he built:

This installment of Andrew’s long-running “diary” covers the Path Planning Project at a high level, and details how it fits into the third term of the Nanodegree program. Like his fellow classmates, Andrew also found this to be a challenging project.

I found the path planning project challenging, in large part due to the fact that we are implementing SAE Level 4 functionality in C++, and to the complexity that comes with the interactions required between the various modules.

Shyam’s post contains a particularly concise 6-point walkthrough of trajectory generation, which is both fundamental to building a path planner, and surprisingly challenging.

The trajectory generation part, which is the most difficult, is covered as part of the project walk-through by Aaron Brown and David Silver. They recommend using the open source C++ tk::spline method to generate a 5th degree polynomial, which helps minimize jerk while accelerating, decelerating and changing lanes.
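One clarification on the quote: tk::spline performs cubic spline interpolation, while the fifth-degree (quintic) polynomial is the classic jerk-minimizing trajectory. As a standalone sketch of the quintic approach (not the walkthrough's code), the hypothetical function below finds the six coefficients that match a start and end state of [position, velocity, acceleration] over a duration T. The first three coefficients come straight from the start state; the remaining three solve a 3×3 linear system, written here in closed form.

```python
def jerk_minimizing_trajectory(start, end, T):
    """Coefficients [a0..a5] of s(t) = a0 + a1*t + ... + a5*t**5 that match
    start = [s, s_dot, s_ddot] at t = 0 and end = [s, s_dot, s_ddot] at t = T."""
    s_i, v_i, acc_i = start
    s_f, v_f, acc_f = end
    a0, a1, a2 = s_i, v_i, acc_i / 2.0

    # What the first three terms fail to deliver at t = T.
    c0 = s_f - (a0 + a1 * T + a2 * T**2)
    c1 = v_f - (a1 + 2 * a2 * T)
    c2 = acc_f - 2 * a2

    # Closed-form solution of the remaining 3x3 system for a3, a4, a5.
    a3 = (10 * c0 - 4 * c1 * T + 0.5 * c2 * T**2) / T**3
    a4 = (-15 * c0 + 7 * c1 * T - c2 * T**2) / T**4
    a5 = (6 * c0 - 3 * c1 * T + 0.5 * c2 * T**2) / T**5
    return [a0, a1, a2, a3, a4, a5]


def evaluate(coeffs, t):
    """Position of the polynomial at time t."""
    return sum(c * t**i for i, c in enumerate(coeffs))


coeffs = jerk_minimizing_trajectory([0.0, 0.0, 0.0], [10.0, 0.0, 0.0], 1.0)
print(coeffs, evaluate(coeffs, 1.0))
```

In a path planner you would typically compute one such polynomial for the along-road coordinate and another for the lateral coordinate, then sample both every 0.02 seconds.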

Alena touches on several interesting points in her post. She focuses on cost functions, which she identifies as the most important part of the project. The post describes her finite state machine and the associated cost functions in detail, and explains how the car decides when to shift lanes. She also touches on how she merged the two branches of her path planner (one for the Nanodegree project, and one for the Bosch Challenge) to create a more generalized planner.

The most important part of the project is to define cost functions. Let’s say we are on a highway with no other cars around. In such a situation, we should stay in the same lane. Constant lane changing is extremely uncomfortable, so my first cost function is change_lane_cost. We penalize our trajectory if we want to change lanes. Honestly, I did a small trick for the Bosch challenge. I did not penalize the trajectory if I wanted to move into the middle lane. It gives me more freedom with maneuvers. Otherwise, I can get stuck in the left-most and right-most lanes when my lane and the middle lane are busy.
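Here is a minimal sketch of how a lane-change penalty like Alena's change_lane_cost might combine with a speed cost, including her middle-lane exemption. The weights, the inefficiency_cost helper, and the three-lane layout are hypothetical choices for illustration, not taken from her code.

```python
MIDDLE_LANE = 1  # hypothetical three-lane highway: 0 = left, 1 = middle, 2 = right
NUM_LANES = 3


def change_lane_cost(current_lane, target_lane, exempt_middle):
    """1.0 for any lane change, 0.0 for staying; optionally exempt moves into the middle lane."""
    if target_lane == current_lane:
        return 0.0
    if exempt_middle and target_lane == MIDDLE_LANE:
        return 0.0
    return 1.0


def inefficiency_cost(lane_speed, speed_limit):
    """Fraction of the speed limit we would give up by driving in this lane."""
    return max(0.0, speed_limit - lane_speed) / speed_limit


def choose_lane(current_lane, lane_speeds, speed_limit, exempt_middle=False,
                w_change=1.0, w_speed=1.0):
    """Pick the cheapest lane among the current lane and its neighbors.
    The current lane is listed first, so it wins ties."""
    neighbors = [lane for lane in (current_lane - 1, current_lane + 1)
                 if 0 <= lane < NUM_LANES]
    candidates = [current_lane] + neighbors

    def total_cost(lane):
        return (w_change * change_lane_cost(current_lane, lane, exempt_middle)
                + w_speed * inefficiency_cost(lane_speeds[lane], speed_limit))

    return min(candidates, key=total_cost)


speeds = [10.0, 20.0, 20.0]  # hypothetical lane speeds in m/s; our (left) lane is slow
print(choose_lane(0, speeds, 22.0), choose_lane(0, speeds, 22.0, exempt_middle=True))
```

With the exemption off, the car stays in its slow left lane because the lane-change penalty outweighs the lost speed; with it on, moving to the middle lane is free and the planner takes it, which mirrors the "stuck in the left-most lane" problem the trick avoids.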

Seeing our students working through these challenges, experiencing their solutions, and learning about their processes fills me with so much excitement about the future of this field—these students represent the next generation of self-driving car engineers, and based on the work they’re already doing, I am certain they’re going to be making incredible contributions. I am especially moved by their generosity in taking the time to share in such detail the work they’re engaged in, and it’s a real pleasure to share their articles with you.

~

Ready to start working on self-driving cars yourself? Apply for our Udacity Self-Driving Car Engineer Nanodegree program today!

Next month I’ll be checking off a common bucket list item by visiting Michigan in January. Most people go for the weather, but I am in fact going for the North American International Auto Show.

I tease, of course, but I truly am excited to be heading back to Motor City, and especially for America’s largest auto show.

On Wednesday, January 17, I’ll be speaking on a panel at Automobili-D, the tech section of the show, and I’ll be in town with some Udacity colleagues through the weekend.

Drop me a note at david.silver@udacity.com and I’d love to say hello. It’s always amazing to head to the center of the automotive world. In many ways it reminds me of how cool it was to visit Silicon Valley when I was a software engineer in Virginia, living outside the center of the software world.

We’ll be holding at least one and maybe a few events for Udacity students, potential students, and partners, and I’ll be announcing those here as we nail them down.

See you in Detroit!

Earlier this fall I spoke about how self-driving cars work at TEDxWilmington’s Transportation Salon, which was a lot of fun.

The frame for my talk was a collection of projects students have done as part of the Udacity Self-Driving Car Engineer Nanodegree Program.

So, how do self-driving cars work?

Glad you asked!

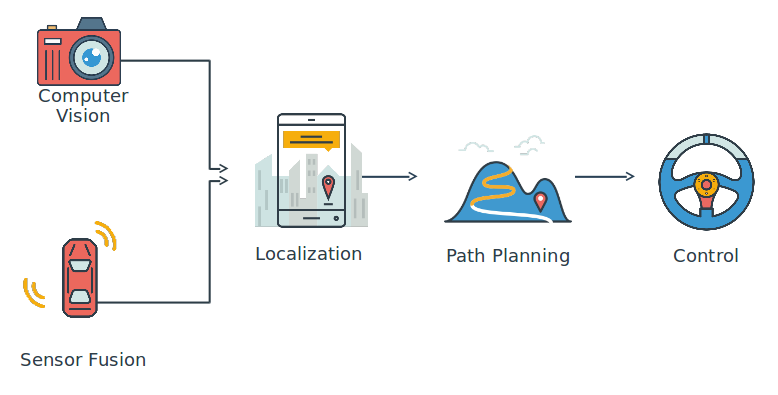

Self-driving cars have five core components:

Computer vision is how we use cameras to see the road. Humans demonstrate the power of vision by handling a car with basically just two eyes and a brain. For a self-driving car, we can use camera images to find lane lines, or track other vehicles on the road.
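As a toy illustration of finding a lane line in a camera image, the sketch below thresholds bright pixels in a tiny hypothetical grayscale image and fits a straight line through them with least squares. Real lane-finding pipelines add camera calibration, color and gradient thresholds, and perspective transforms; the image, threshold, and function names here are assumptions that only show the core idea.

```python
def bright_pixels(image, threshold=200):
    """Return (x, y) coordinates of pixels at or above `threshold` (0-255 grayscale)."""
    return [(x, y) for y, row in enumerate(image) for x, value in enumerate(row)
            if value >= threshold]


def fit_lane_line(points):
    """Least-squares fit of x = m * y + b.

    Lane lines run roughly top-to-bottom in a camera image, so x is regressed on y
    to avoid near-infinite slopes.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    var_y = sum((y - mean_y) ** 2 for _, y in points)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    m = cov_xy / var_y
    return m, mean_x - m * mean_y


# A tiny hypothetical 4 x 6 grayscale image with one bright, diagonal lane marking.
image = [
    [0, 0, 0, 0, 255, 0],
    [0, 0, 0, 250, 0, 0],
    [0, 0, 240, 0, 0, 0],
    [0, 230, 0, 0, 0, 0],
]
print(fit_lane_line(bright_pixels(image)))
```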

Sensor fusion is how we integrate data from other sensors, like radar and lasers—together with camera data—to build a comprehensive understanding of the vehicle’s environment. As good as cameras are, there are certain measurements — like distance or velocity — at which other sensors excel, and other sensors can work better in adverse weather, too. By combining all of our sensor data, we get a richer understanding of the world.
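A minimal way to see why combining sensors helps: if a camera and a radar each give an independent, Gaussian estimate of the same distance, the best combined estimate weights each one by the inverse of its variance, and the result is more certain than either input. This is the scalar form of the Kalman filter measurement update; the sensor readings and variances below are made-up numbers.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Combine two independent Gaussian estimates of the same quantity.

    This is the scalar Kalman measurement update: each estimate is weighted by the
    inverse of its variance, and the fused variance is smaller than either input.
    """
    mean = (mean_a * var_b + mean_b * var_a) / (var_a + var_b)
    var = (var_a * var_b) / (var_a + var_b)
    return mean, var


# Hypothetical numbers: a noisier camera range estimate and a sharper radar one.
camera_range, camera_var = 31.0, 4.0   # meters, meters^2
radar_range, radar_var = 30.0, 0.25
print(fuse(camera_range, camera_var, radar_range, radar_var))
```

The fused estimate lands much closer to the radar reading, because radar is the more precise sensor for range in this example.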

Localization is how we figure out where we are in the world, which is the next step after we understand what the world looks like. We all have cellphones with GPS, so it might seem like we know where we are all the time already. But in fact, GPS is only accurate to within about 1–2 meters. Think about how big 1–2 meters is! If a car were wrong by 1–2 meters, it could be off on the sidewalk hitting things. So we have much more sophisticated mathematical algorithms that help the vehicle localize itself to within 1–2 centimeters.
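Localization algorithms vary, but the core idea can be shown with a one-dimensional histogram filter on a hypothetical cyclic map of colored cells: a measurement update sharpens our belief about where we are, and a motion update shifts that belief as we move. Production systems use far richer maps and sensor models (particle filters are a common choice); the map, probabilities, and function names here are illustrative assumptions.

```python
def sense(belief, world, measurement, p_hit=0.6, p_miss=0.2):
    """Measurement update: boost cells whose map color matches what we observed, then normalize."""
    weighted = [b * (p_hit if cell == measurement else p_miss)
                for b, cell in zip(belief, world)]
    total = sum(weighted)
    return [w / total for w in weighted]


def move(belief, steps):
    """Motion update for an exact move of `steps` cells in a cyclic world."""
    n = len(belief)
    return [belief[(i - steps) % n] for i in range(n)]


world = ["green", "red", "red", "green", "green"]
belief = [1.0 / len(world)] * len(world)  # start fully uncertain
belief = sense(belief, world, "red")
belief = move(belief, 1)
print([round(b, 3) for b in belief])
```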

Path planning is the next step, once we know what the world looks like, and where in it we are. In the path planning phase, we chart a trajectory through the world to get where we want to go. First, we predict what the other vehicles around us will do. Then we decide which maneuver we want to take in response to those vehicles. Finally, we build a trajectory, or path, to execute that maneuver safely and comfortably.

Control is the final step in the pipeline. Once we have the trajectory from our path planning block, the vehicle needs to turn the steering wheel and hit the throttle or the brake, in order to follow that trajectory. If you’ve ever tried to execute a hard turn at a high speed, you know this can get tricky! Sometimes you have an idea of the path you want the car to follow, but actually getting the car to follow that path requires effort. Race car drivers are phenomenal at this, and computers are getting pretty good at it, too!
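A common textbook starting point for this step is a PID controller that steers based on cross-track error (CTE), the car's sideways distance from the planned path. The sketch below is a minimal, hypothetical version paired with a toy plant in which the steering command directly sets sideways speed; real vehicle dynamics are much richer, and the gains here are arbitrary.

```python
class PID:
    """Steering from cross-track error (CTE): how far the car is from the planned path."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, cte, dt):
        """Return a steering command that pushes the CTE back toward zero."""
        self.integral += cte * dt
        derivative = 0.0 if self.prev_error is None else (cte - self.prev_error) / dt
        self.prev_error = cte
        return -(self.kp * cte + self.ki * self.integral + self.kd * derivative)


# Toy plant (an assumption, not a vehicle model): the steering command directly
# sets how fast the car moves sideways, in meters per second.
pid = PID(kp=1.0, ki=0.0, kd=0.1)
offset, dt = 1.0, 0.1  # start 1 m off the path
for _ in range(100):
    offset += pid.step(offset, dt) * dt
print(round(offset, 4))
```

The proportional term does most of the work, the derivative term damps overshoot, and the integral term (zero here) would correct a steady bias such as a misaligned steering wheel.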

The video at the beginning of this post covers similar territory, and I hope that between that and what I’ve written here, you have a better sense of how self-driving cars work.

Ready to start learning how to do it yourself? Apply for our Self-Driving Car Engineer Nanodegree program, or enroll in our Intro to Self-Driving Cars Nanodegree program, depending on your experience level, and let’s get started!

Udacity and Infosys just announced a partnership to train hundreds of Infosys’ top software engineers in autonomous vehicle development.

Quoting Infosys President Ravi Kumar:

Udacity and Infosys are uniting the elements of education and transformative technology in this one-of-a-kind program. Trainees, with the first 100 selected through a global hackathon in late November, will immerse themselves in autonomous technology courses that require hands-on training to simulate real-life scenarios. By the end of 2018, Infosys will have trained 500 employees on the spectrum of technologies that go into building self-driving vehicles, and in doing so will help to evolve the future of transportation for drivers, commuters and even mass transit systems.

And Udacity CEO Vishal Makhijani:

This program will be part of Udacity Connect, which is Udacity’s in-person, blended learning program. Infosys engineers from around the world will participate in Udacity’s online Self-Driving Car Engineer Nanodegree program, and combine one term of online studies with two terms of being physically located together at the Infosys Mysore training facility, where the program will be facilitated by an in-person Udacity session lead.

Two aspects of this partnership are particularly exciting for me. One is simply working with a top technology company like Infosys. When we started building the Nanodegree program, our objective was to “become the industry standard for training self-driving car engineers.” This partnership moves us significantly closer to that objective. We are grateful and excited for the opportunity, and thrilled for the participating engineers.

The other exciting aspect of this partnership is that it will happen in India. The Infosys engineers will fly in from all over the world, but there is something special about conducting the program in Mysore.

For many years autonomous vehicle development has happened in just a few places: Detroit, Pittsburgh, southern Germany. Recently, we’ve seen autonomous vehicle development expand to Silicon Valley, Japan, Israel, various parts of Europe, Singapore, and beyond. Training autonomous vehicle engineers in India expands the opportunities for students worldwide.

7% of students in the Udacity Self-Driving Car Engineer Nanodegree program are from India. The Infosys partnership is an important next step in building a robust pipeline of job opportunities for our students on the subcontinent.

One of the delights of teaching at Udacity is the opportunity to work with world-class experts who are excited about sharing their knowledge with our students.

We have the great fortune of working with Mercedes-Benz Research and Development North America (MBRDNA) to build the Self-Driving Car Engineer Nanodegree Program. In particular, we get to work with Dominik Nuss, principal engineer on their sensor fusion team.

In these two videos, Dominik explains how unscented Kalman filters fuse together data from multiple sensors across time:

These are just a small part of a much larger unscented Kalman filter lesson that Dominik teaches. This is an advanced, complex topic I haven’t seen covered nearly as well anywhere else.
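Dominik's lesson covers the full multidimensional filter. As a much smaller, hypothetical illustration, here is the unscented transform in one dimension: pick a few sigma points from the current Gaussian, push them through a nonlinear function, and recover a new mean and variance from weighted sums. The function name and the spreading parameter λ = 3 - n (a common heuristic) are assumptions.

```python
import math


def unscented_transform_1d(mean, var, f, lam=2.0):
    """Push a scalar Gaussian N(mean, var) through a nonlinear function f.

    Uses 2n + 1 = 3 sigma points for n = 1, spread by sqrt((lam + n) * var).
    lam = 2.0 follows the common heuristic lam = 3 - n.
    """
    n = 1
    spread = math.sqrt((lam + n) * var)
    sigma_points = [mean, mean + spread, mean - spread]
    weights = [lam / (lam + n)] + [1.0 / (2 * (lam + n))] * 2

    transformed = [f(x) for x in sigma_points]
    new_mean = sum(w * y for w, y in zip(weights, transformed))
    new_var = sum(w * (y - new_mean) ** 2 for w, y in zip(weights, transformed))
    return new_mean, new_var


print(unscented_transform_1d(0.0, 1.0, lambda x: x * x))
```

For f(x) = x² applied to N(0, 1), the true mean and variance are 1 and 2, and these three sigma points recover both; for a linear function the transform is exact.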

MBRDNA has just published a terrific profile of Dominik, along with a nifty video of him operating one of the Mercedes-Benz autonomous vehicles.

Read the whole thing and learn what it’s like to work on one of the top teams in the industry. Then, enroll in our program (if you haven’t already!), and start building your OWN future in this amazing field!

The guiding star of the Udacity Self-Driving Car Engineer Nanodegree Program is jobs. Everything we do ultimately connects to preparing students to become autonomous vehicle engineers.

For that reason, Udacity has invested heavily in career support for students. Every student has access to optional projects where they can get personalized reviews of résumés and cover letters, as well as guidance for online profiles on sites like LinkedIn, GitHub, and Udacity’s own Career Portal.

In this lesson, students hear from our Careers Team about the services and extracurricular professional activities that Udacity offers for students.

There are also videos from three of our content partners in the Nanodegree — Mercedes-Benz, NVIDIA, and Uber ATG — explaining what it’s like to work with them, how to get a job with them, and the value the Nanodegree Program provides.

We also have pointers to extracurricular lessons that are available to all Nanodegree students on two topics: “Job Search Strategies”, and “Networking”.

The “Job Search Strategies” lesson covers how to build a résumé and cover letter tailored to a specific job, as well as strategies for finding that job.

The “Networking” lesson offers tips for building your personal brand and developing a network that can push job opportunities in your direction. There are also optional projects through which you can get personal reviews of your GitHub, LinkedIn, and Udacity profiles.